What Regularization Means in Machine Learning

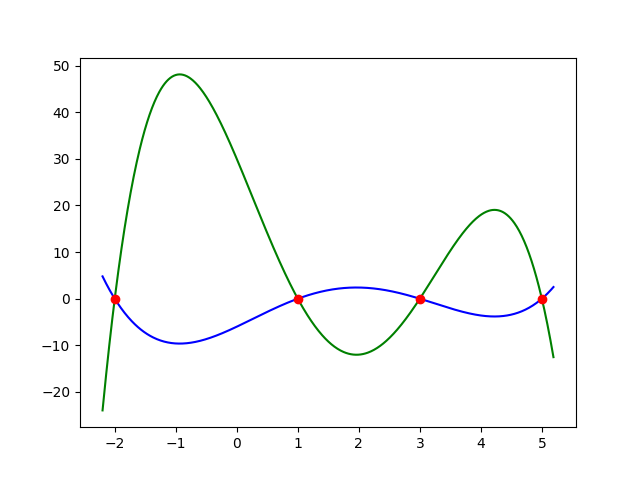

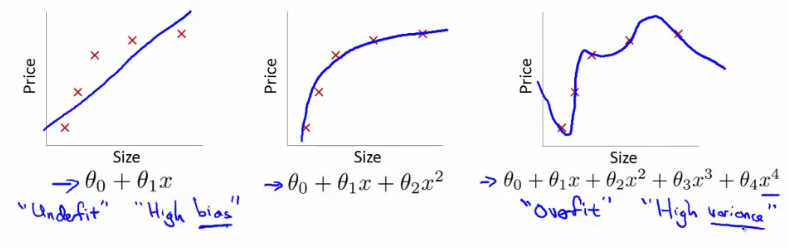

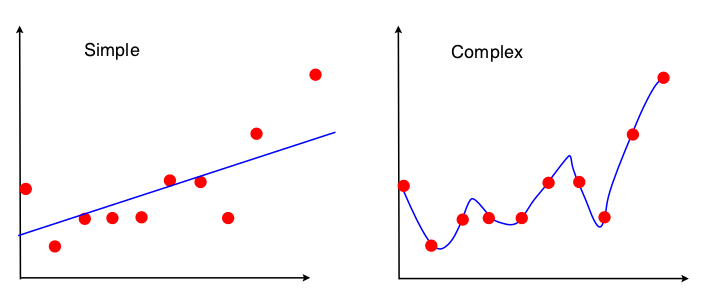

In other words, regularization discourages learning a more complex or more flexible machine learning model in order to prevent overfitting. In a linear model, the intercept quantifies the mean value of the response when all predictors are zero.

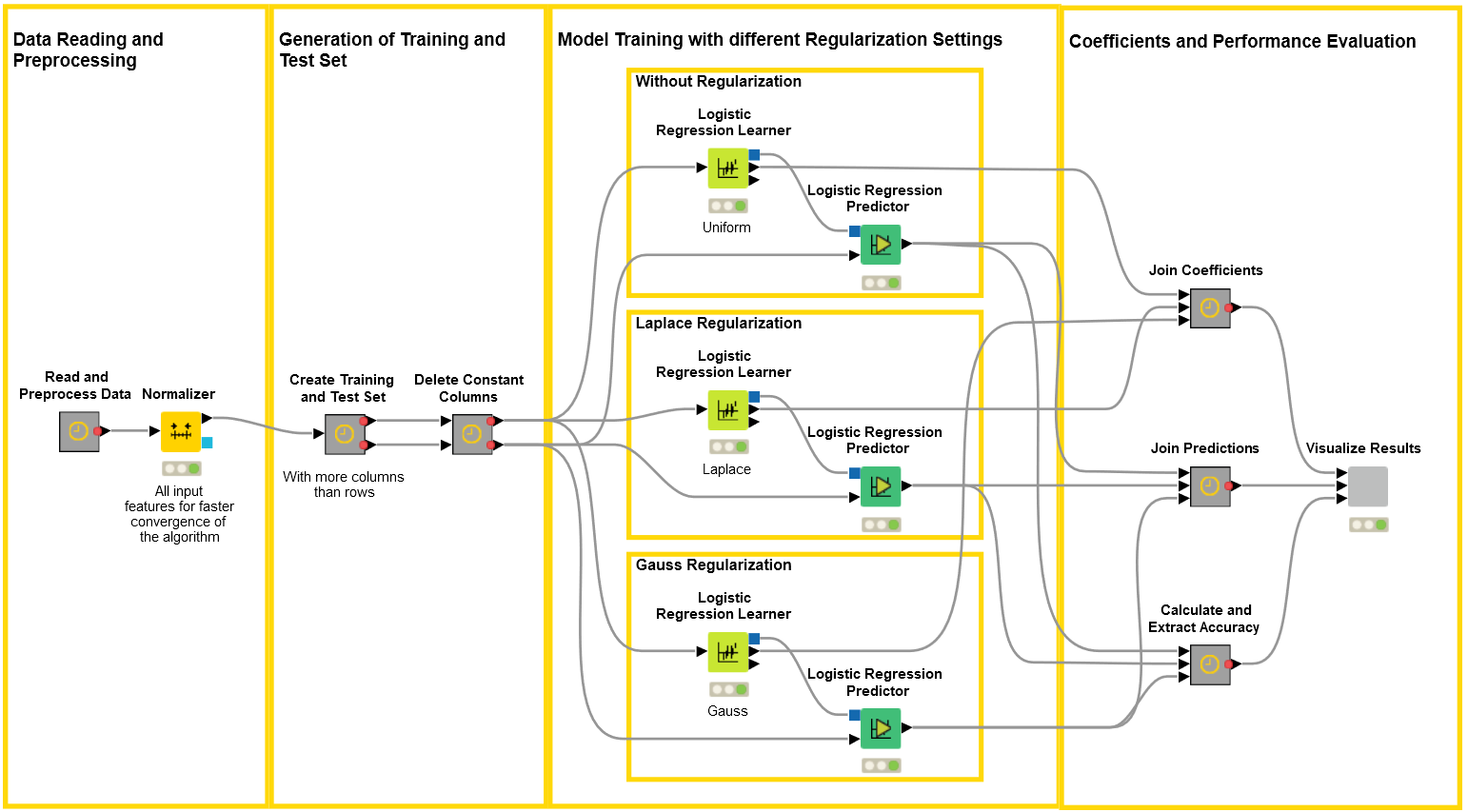

Understanding Regularization For Logistic Regression Knime

It is a technique to prevent the model from overfitting by adding extra information to it.

Regularization is one of the most important concepts in machine learning. It serves two purposes: to solve an ill-posed problem (a problem without a unique and stable solution) and to prevent model overfitting. In general, regularization involves augmenting the input information to enforce generalization.

In the context of machine learning, regularization is the process that regularizes, or shrinks, the coefficients toward zero. During regularization, a penalty (or complexity) term is added to the loss of the complex model.

L2 regularization is used in ridge regression, a model-tuning method for analyzing data that suffer from multicollinearity. Technically, regularization avoids overfitting by adding a penalty to the model's loss function.

In short: regularized objective = loss function + penalty. The concept of regularization is widely used even outside the machine learning domain.
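As a minimal sketch of the "loss function + penalty" idea, the snippet below adds an L1 or L2 penalty to a mean squared error loss for a linear model. The function names (`mse`, `penalty`, `regularized_loss`), the choice of mean squared error, and the name `lam` for the penalty strength λ are assumptions made for illustration; they do not come from the original text.

```python
def mse(weights, bias, xs, ys):
    """Mean squared error of the linear model y = bias + weights . x."""
    total = 0.0
    for x, y in zip(xs, ys):
        pred = bias + sum(w * xi for w, xi in zip(weights, x))
        total += (y - pred) ** 2
    return total / len(xs)


def penalty(weights, kind="l2"):
    """L1 penalty: sum of |w|. L2 penalty: sum of w^2. The bias is not penalized."""
    if kind == "l1":
        return sum(abs(w) for w in weights)
    if kind == "l2":
        return sum(w * w for w in weights)
    raise ValueError("kind must be 'l1' or 'l2'")


def regularized_loss(weights, bias, xs, ys, lam=0.1, kind="l2"):
    """Data-fit loss plus lam times the chosen penalty (lam is an assumed name for lambda)."""
    return mse(weights, bias, xs, ys) + lam * penalty(weights, kind)


# Toy data, invented purely for illustration.
xs = [[1.0, 2.0], [2.0, 0.0], [3.0, 1.0]]
ys = [1.0, 4.0, 6.0]
weights, bias = [2.0, -1.0], 0.5

print(regularized_loss(weights, bias, xs, ys, lam=0.0))             # plain MSE
print(regularized_loss(weights, bias, xs, ys, lam=0.1, kind="l2"))  # MSE + 0.1 * sum(w^2)
print(regularized_loss(weights, bias, xs, ys, lam=0.1, kind="l1"))  # MSE + 0.1 * sum(|w|)
```

With `lam=0.0` the result is the unpenalized loss; any positive `lam` makes large weights more expensive, which is what pushes a fitted model toward smaller coefficients.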

Regularization is one of the techniques used to control overfitting in highly flexible models. In lasso regression, the model is penalized by the sum of the absolute values of its coefficients.
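For lasso, the penalty is the sum of the absolute values of the coefficients (the L1 norm). The sketch below shows its effect in the simplest possible case: a single feature and no intercept, where the minimizer has a closed form based on soft-thresholding. The names `soft_threshold` and `lasso_1d`, the 0.5 factor on the squared error, and the toy data are assumptions for illustration only.

```python
def soft_threshold(z, lam):
    """Shrink z toward zero by lam, and return exactly 0 when |z| <= lam."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0


def lasso_1d(xs, ys, lam):
    """Minimize 0.5 * sum((y - b*x)^2) + lam * |b| over a single coefficient b."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return soft_threshold(sxy, lam) / sxx


# Toy data generated by y = 2x, invented for illustration.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

for lam in (0.0, 14.0, 28.0):
    print(lam, lasso_1d(xs, ys, lam))
```

In this setup the coefficient is 2.0 with no penalty, is halved at `lam = 14.0`, and becomes exactly zero once `lam` reaches 28.0. That exact zero is why the L1 penalty is often associated with feature selection.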

The regularization parameter in machine learning is denoted λ. It imposes a higher penalty on variables with larger coefficient values, and hence controls the strength of the penalty term. Regularization is used with many different machine learning algorithms, and there are three commonly used regularization techniques.

By definition, regularization is the method used to reduce error by fitting a function appropriately on the given training set while avoiding overfitting of the model.

Regularization is a form of regression that constrains, regularizes, or shrinks the coefficient estimates toward zero. L2 regularization, also known as ridge regression, adjusts overfitted models by adding a penalty equal to the sum of the squares of the coefficient magnitudes.
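The shrinking effect of the squared penalty can be seen in a small sketch. It assumes a single feature and no intercept, in which case the ridge coefficient has a simple closed form. The function name `ridge_1d`, the parameter name `lam` for λ, and the toy data are assumptions for illustration.

```python
def ridge_1d(xs, ys, lam):
    """Minimize sum((y - b*x)^2) + lam * b^2 over a single coefficient b.

    Setting the derivative to zero gives b = sum(x*y) / (sum(x^2) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


# Toy data generated by y = 2x, invented for illustration.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

for lam in (0.0, 14.0, 1000.0):
    print(lam, ridge_1d(xs, ys, lam))
```

With `lam = 0.0` the result is the ordinary least squares coefficient (2.0 here). Larger values of `lam` pull the coefficient toward zero, but for any finite `lam` it stays nonzero in this example.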

In mathematics, statistics, finance, and computer science, particularly in machine learning and inverse problems, regularization is a process that changes the resulting answer to be simpler. How does regularization work? Let's consider the simple linear regression equation.
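One common way to write the linear regression equation and its regularized objectives is sketched below. The notation is an assumption, since the original does not give the formulas: $\beta_0$ is the intercept, $\beta_1, \dots, \beta_p$ are the coefficients, $x_{ij}$ is the value of feature $j$ for observation $i$, and $\lambda \ge 0$ is the regularization parameter.

$$
\hat{y}_i = \beta_0 + \sum_{j=1}^{p} \beta_j x_{ij}
$$

$$
\text{RSS} = \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2
$$

$$
\text{Ridge (L2):} \quad \text{RSS} + \lambda \sum_{j=1}^{p} \beta_j^2
\qquad\qquad
\text{Lasso (L1):} \quad \text{RSS} + \lambda \sum_{j=1}^{p} \lvert \beta_j \rvert
$$

In both cases the sum in the penalty starts at $j = 1$, so the intercept $\beta_0$ is left unpenalized. Setting $\lambda = 0$ recovers ordinary least squares, and increasing $\lambda$ shrinks the coefficients toward zero.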

Regularization methods add constraints to serve both purposes: stabilizing an ill-posed problem and preventing overfitting.

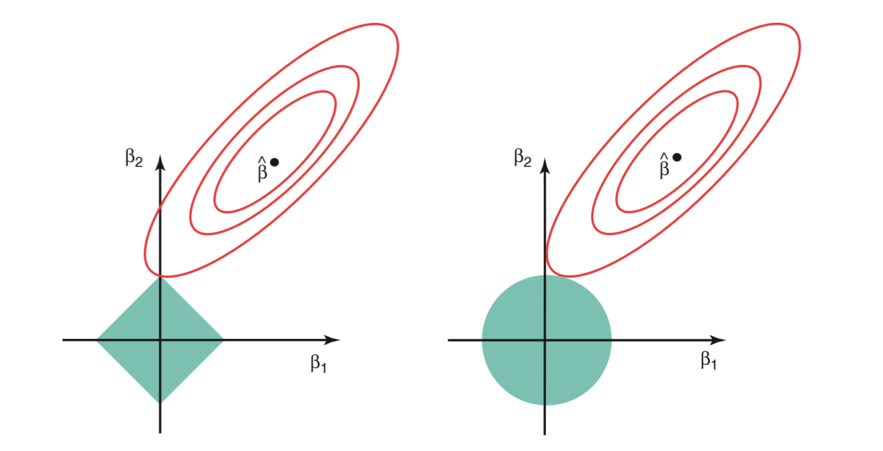

Fighting Overfitting With L1 Or L2 Regularization Which One Is Better Neptune Ai
